This is a follow-up to our October 2022 post, Sensors and Benign Scanner Activity.

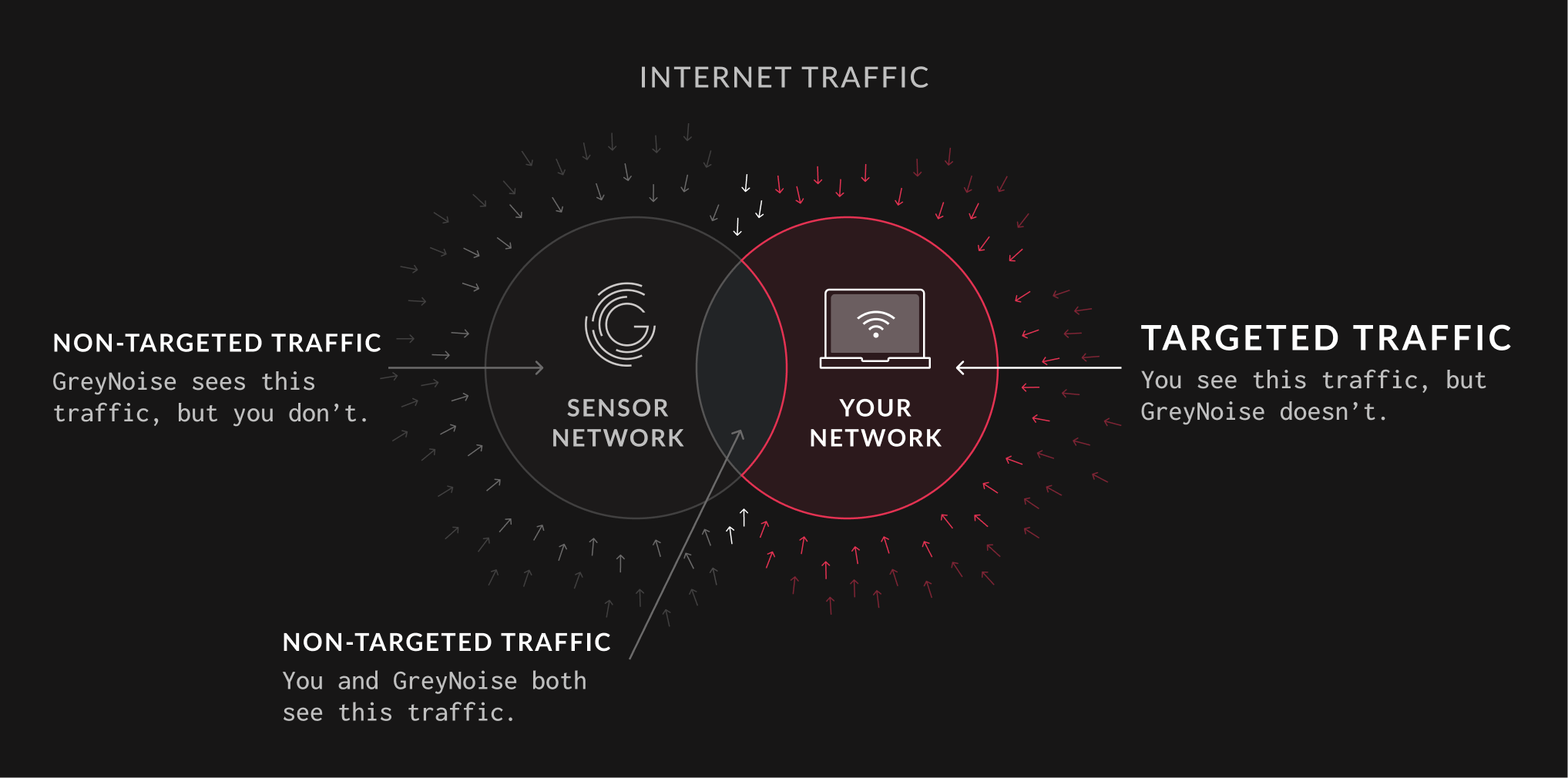

Throughout the year, GreyNoise tends to focus quite a bit on the “naughty” connections coming our way. After all, that’s how we classify IP addresses as malicious so organizations can perform incident triage at light speed, avoid alert fatigue, and get a leg up on opportunistic attackers by using our IP-based block-lists.

At this time of year, we usually take some time to don our Santa hats and review the activities of the “nice” (a.k.a., “benign”) sources that make contact with our fleet.

Scanning the entire internet now drives both cybersecurity attack strategies and defense tactics. Every day, multiple legitimate organizations perform mass scanning of IPv4 space to gather data about exposed services, vulnerabilities, and general internet health. In November 2024, we deployed 24 new GreyNoise sensors across diverse network locations to study the behavior and patterns of these benign scanners.

Why This Matters

When organizations deploy new internet-facing assets, they typically experience a flood of inbound connection attempts within minutes. While many security teams focus on malicious actors, understanding benign scanning activity is equally crucial for several reasons:

- These scans generate significant amounts of log data that can obscure actual threats

- Security teams waste valuable time investigating legitimate scanning activity

- Benign scanners often discover and report vulnerable systems before malicious actors

The Experiment

We positioned 24 freshly baked sensors across five separate autonomous systems and eight distinct geographies and began collecting data on connection attempts from known benign scanning services. We narrowed the focus down to the top ten actors with the most tags in November. The analyzed services included major players in the internet scanning space, such as Shodan, Censys, and BinaryEdge, along with newer entrants like CriminalIP and Alpha Strike Labs.

Today, we’ll examine these services' scanning patterns, protocols, and behaviors when they encounter new internet-facing assets. Understanding these patterns helps security teams better differentiate between routine internet background noise and potentially malicious reconnaissance activity. There’s a “Methodology” section at the tail end of this post if you want the gory details of how the sausage was made.

The Results

We’ll first take a look at the fleet size of the in-scope benign scanners.

The chart below plots the number of observed IP addresses from each organization for the entire month of November vs. the total tagged interactions from those sources (as explained in the Methodology section). Take note of the tiny presence of both Academy for Internet Research and BLEXBot, as you won’t see them again in any chart. While they made the cut for the month, they also made no effort to scan the sensors used in this study.

As we’ll see, scanner fleet size does not necessarily guarantee nimbleness or completeness when it comes to surveying services on the internet.

Contact Has Been Made

The internet scanner/attack surface management (ASM) space is pretty competitive. One area where speed makes a difference is how quickly new nodes are added to the various inventories. All benign scanners save for ONYPHE (~9 minutes) and CriminalIP (~17 minutes) hit at least one of the target sensors within five minutes of the sensor coming online.
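For readers who want to reproduce a "time to first contact" number against their own logs, here is a minimal sketch of the idea. It is not the pipeline used for this post: the record layout (`scanner`, `sensor_id`, `timestamp`), the function name, and the sample values are all hypothetical and exist only to illustrate the calculation.

```python
from datetime import datetime, timedelta


def time_to_first_contact(sensor_online, events):
    """Per scanner, return the shortest delay between any sensor coming
    online and that scanner's first connection to that sensor.

    sensor_online: dict of sensor_id -> datetime the sensor came online
    events: iterable of (scanner, sensor_id, timestamp) tuples (hypothetical layout)
    """
    best = {}
    for scanner, sensor_id, ts in events:
        delay = ts - sensor_online[sensor_id]
        if delay < timedelta(0):
            continue  # skip records that predate the sensor coming online
        if scanner not in best or delay < best[scanner]:
            best[scanner] = delay
    return best


# Made-up sample data, not observations from the study.
online = {
    "s1": datetime(2024, 11, 19, 0, 0),
    "s2": datetime(2024, 11, 19, 1, 0),
}
events = [
    ("scanner-a", "s1", datetime(2024, 11, 19, 0, 3)),
    ("scanner-a", "s2", datetime(2024, 11, 19, 1, 10)),
    ("scanner-b", "s1", datetime(2024, 11, 18, 23, 59)),  # before s1 was online
    ("scanner-b", "s1", datetime(2024, 11, 19, 0, 20)),
    ("scanner-b", "s2", datetime(2024, 11, 19, 1, 9)),
]
result = time_to_first_contact(online, events)
for scanner, delay in sorted(result.items()):
    print(scanner, delay)
```

Measuring from each sensor's own start time, rather than from a single global start, matters when sensors come online at different moments.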

BinaryEdge and ONYPHE display similar dense clustering patterns, with significant activity bursts occurring around the 1-week mark. Their scanner fleets appear to generate a high volume of unique IP contacts, forming distinctive cone-shaped distributions that suggest systematic scanning behavior.

Censys and Bitsight exhibit comparable behavioral patterns, though Bitsight’s first contacts appear more concentrated in recent timeframes. This could indicate a more aggressive or efficient scanning methodology for discovering new hosts.

ShadowServer shows a more dispersed pattern of first contacts, with clusters forming across multiple time intervals rather than concentrated bursts. This suggests a different approach to host discovery, possibly employing more selective or targeted scanning strategies.

Alpha Strike Labs and Shodan.io demonstrate sparser contact patterns, indicating either more selective scanning criteria or potentially smaller scanner fleets. Their distributions show periodic clusters rather than continuous streams of new contacts.

CriminalIP presents the most minimal contact pattern, with occasional first contacts spread across the timeline. This could reflect a highly selective approach to host identification or a more focused scanning methodology.

The above graph also shows just how extensive some of the scanner fleets are (each dot is a single IP address making contact with one of the sensors; dot colors distinguish one sensor node from another).

If we take all that data and whittle it down to count which benign scanners hit the most sensors first, we see that ONYPHE is the clear winner. Censys follows, demonstrating strong but more focused scanning capabilities, with BinaryEdge coming in third.
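The "who got there first" tally can be sketched in a few lines. This is an illustration under assumptions, not the code behind the chart: the tuple layout and the scanner and sensor names are hypothetical, and timestamps are simplified to seconds since the sensor came online.

```python
from collections import Counter


def first_scanner_per_sensor(events):
    """Count, per scanner, how many sensors it was the first to contact.

    events: iterable of (scanner, sensor_id, seconds_since_online) tuples
    (hypothetical layout). On an exact tie, the record seen first wins.
    """
    earliest = {}  # sensor_id -> (seconds_since_online, scanner)
    for scanner, sensor_id, ts in events:
        if sensor_id not in earliest or ts < earliest[sensor_id][0]:
            earliest[sensor_id] = (ts, scanner)
    return Counter(scanner for _, scanner in earliest.values())


# Made-up sample data, not observations from the study.
events = [
    ("scanner-a", "s1", 30),
    ("scanner-b", "s1", 12),
    ("scanner-a", "s2", 5),
    ("scanner-c", "s2", 40),
    ("scanner-b", "s3", 7),
    ("scanner-c", "s3", 90),
]
wins = first_scanner_per_sensor(events)
print(wins.most_common())
```

With this sample, `scanner-b` reaches two sensors first and `scanner-a` one, while `scanner-c` never wins and so does not appear in the tally at all.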

The chart below digs a bit deeper into the first contact scenarios. We identified the very first contacts to each of the 24 sensor nodes from each benign scanner. ONYPHE shows a concentrated burst of activity in the 6-12 hour window, while Bitsight’s contacts are more evenly distributed throughout the observation period. Censys demonstrates a mixed pattern, with clusters in the early hours followed by sporadic contacts. ShadowServer exhibits a notably consistent spread of first contacts across multiple time windows.

BinaryEdge’s pattern suggests coordinated scanning activity, with tight groupings of contacts that could indicate automated discovery processes. Alpha Strike Labs shows a selective, possibly more targeted approach to first contact, while CriminalIP has minimal but distinct touchpoints. Shodan rounds out the observation set with periodic contacts that suggest a methodical scanning approach.

Speed Versus Reach

While speed is a critical competitive edge, coverage may be an even more important one. It’s fine to be the first to discover, but if you’re not making a comprehensive inventory, are you even scanning?

We counted up all the ports these benign scanners probed over the course of a week. Censys leads the pack with an impressive 36,056 ports scanned, followed by ShadowServer scanning 19,166 ports, and Alpha Strike Labs covering 14,876 ports.

ONYPHE, Shodan, BinaryEdge, and Bitsight all seem to take similar approaches when it comes to probing for services on midrange and higher ports. Every scanner, save for CriminalIP, definitely knows when you’ve been naughty and tried to hide some service outside traditional port ranges.

Before moving on to our last section, it is important to remind readers that we are only showing a 7-day view of activity. Some scanners, notably Censys, have much broader port coverage than the roughly 55% of port space we observed in this window. The internet is a very tough environment to perform measurements in. Routes break, cables are cut, and even one small connection hiccup could mean a missed port hit. Plus, it’s not very nice to rapidly clobber a remote node that one is not responsible for.
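As a quick sanity check on that 55% figure, the sketch below converts the port counts quoted above into a share of port space. It assumes the counts are distinct port numbers and that the denominator is 65,535 (ports 1 through 65535, with port 0 excluded); neither assumption is stated in the data itself, and the function name is made up for illustration.

```python
# Assumption: usable port space is 1-65535 (port 0 excluded).
TOTAL_PORTS = 65_535

# Port counts quoted in this post for the 7-day window.
ports_seen = {
    "Censys": 36_056,
    "ShadowServer": 19_166,
    "Alpha Strike Labs": 14_876,
}


def coverage_pct(distinct_ports, total=TOTAL_PORTS):
    """Share of port space covered, as a percentage rounded to one decimal."""
    return round(100 * distinct_ports / total, 1)


coverage = {name: coverage_pct(n) for name, n in ports_seen.items()}
for name, pct in coverage.items():
    print(f"{name}: {pct}%")
```

Under those assumptions, Censys lands at about 55%, ShadowServer at about 29%, and Alpha Strike Labs at about 23% of port space for the week.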

Tag Time

The vast majority of benign contacts have no real payloads. Some of them do make checks for specific services or for the presence of certain weaknesses. When they do, the GreyNoise Global Observation Grid records a tag for that event. We wanted to see just how many tags these benign scanners sling our way.

Given ShadowServer’s mission, it makes sense that they’d be looking for far more weaknesses than the other benign scanners. The benign scanner organizations that have an attack surface management (ASM) practice will also usually perform targeted secondary scans for customers who have signed up for such inspections.

In Conclusion

We hope folks enjoyed this second look at what benign scanners are up to and what their strategies seem to be when it comes to measuring the state of the internet.

If you have specific questions about the data or would like to see different views, please do not hesitate to contact us in our community Slack or via email at research@greynoise.io.

Methodology

Sensors were deployed between 2024-11-19 and 2024-11-26 (UTC) across five autonomous systems and in the IP space of the following countries:

- Croatia

- Estonia

- Ghana

- Kenya

- Luxembourg

- Norway

- Slovenia

- South Africa

- Sweden

The in-scope benign actors (based on total tag hits across all of November):

- Academy for Internet Research

- BLEXBot

- Alpha Strike Labs

- BinaryEdge.io

- Bitsight

- Censys

- CriminalIP

- ONYPHE

- ShadowServer.org

- Shodan.io

Both Palo Alto’s Cortex Expanse and ByteSpider were in the original top ten, but were removed as candidates. Each of those services is prolific/noisy (one might even say “rude”), and including them would have skewed the results and made it impossible to compare the performance of the more traditional scanners. Furthermore, while ByteSpider may be (arguably) benign, it has more of a web crawling mission that differs from the intents of the services on the rest of the actor list.

We measured the inbound traffic from the in-scope benign actors for a 7-day period.

Unfortunately, neither Academy for Internet Research nor BLEXBot reached out and touched these 24 new sensor nodes, so they have no presence in the results.
